The (not so) hidden challenge of bias

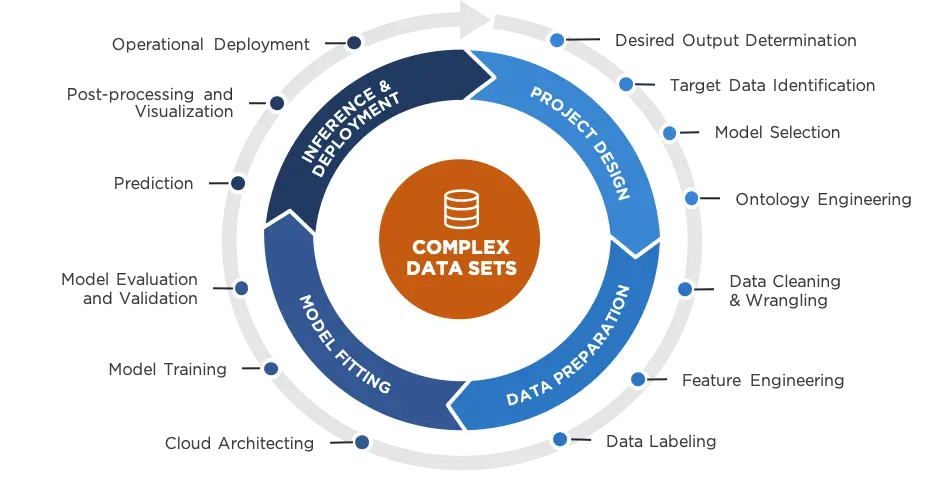

Throughout the Hidden Challenges in Machine Learning Lifecycle series, we moved quadrant by quadrant through the process of designing data science solutions. In each phase, from Project Design to Data Preparation, from Model Fitting to Inference, our data scientists iterate on a variety of algorithms for purposes as disparate as their architectures are unique (Siamese NNs, LSTM models, and NARX models to name a few). The execution of each phase in the cycle is influenced by human decision-making and prediction is distilled from the vapors of data-driven complexity.

Some might counter that despite the potentiality for these errors, machines still have a greater capacity for unbiased decision-making than humans. Indeed, a chief utility of ML is that algorithms can discover patterns that humans cannot. Nonetheless, it’s unwise to assume that those patterns are comprehensive (as the datasets used for training may not have been entirely representative) or infallible (as the scientist that trained the model may have introduced unknown biases).

Others, like Gerd Gigerenzer, might equate biases to heuristics that are more helpful than harmful.[2] Heuristics are mental shortcuts that allow humans to assimilate perceived reality and quickly make decisions based on sensory stimulus—when the lion roars, you run in the opposite direction. Heuristics are also culprits in many social and moral dilemmas of the modern age such as prejudice and profiling. Essentially, the brain sees a pattern it recognizes—a familiar pathway that has been fired on many times previously (i.e., Hebbian Plasticity), so it stops processing the current environment, skipping directly ahead to the predicted (and familiar) outcome.[3]

In computer science, heuristic algorithms trade prediction accuracy, precision, and completeness for speed, optimizing for approximation methods and output to alleviate the computational intensity of finding the exact answer. You solve for a probability range instead of a precise value, like the answer to a Fermi question.[4] While some heuristics can be good for humans (life-saving in the case of the roaring lion), these mental shortcuts are less necessary in machines given that we have access to the scalable compute power to tackle almost any data science challenge.

What to do about biases in algorithms

Having determined that algorithms stem from two invariable sources, the engineers that train them and the datasets on which they are trained, how can we approach the data and the scientists to ensure the ‘data science’ remains truly objective? For the solution, we turn to the principle underlying the plethora of bias mitigation strategies in human decision-making: Get more Information. Teammates. Perspective. Data.

You likely have heard that the best teams are interdisciplinary.[5] You probably have heard that one of the best ways to ease reliance on one potentially biased opinion is to analyze the situation through someone else’s view, or to role-play—more perspectives (IARPA has certainly heard of it).[6][7] And you likely know that the way to avoid an unrepresentative sample is to ensure the collection of a truly representative dataset—more data.[8]

So how do we cram more information, teammates, perspectives, and data into the logic of our algorithms?

Representative data: a must

Think of data like AI food. If the model only eats McDonald’s, it will not produce very good results (resisting the urge to quote every data scientist’s favorite adage). Similarly, if the model only eats spinach, it also will not produce very good results, even though spinach is healthy and should be consumed daily. The spinach model will be getting a TON of good input but lacking equally necessary components like protein.

The point is that each ML model is trained on an input. That input needs to be varied enough (and representative enough) that it teaches the model everything it needs to know about the target ontology. If the model needs to identify drinking vessels and the training data only ever contain coffee mugs, how can we expect the model to accurately identify stemware? This problem is one that data scientists refer to as class imbalance. Remedying class imbalance seems simple enough. Get more training data (or if you can’t get it, consider generating it synthetically). But we all know that isn’t always possible. If all else fails, ask Jason Brownlee.

Sampling bias is not a new problem

Researchers have been grappling with sampling bias since the Statistical Society of London pioneered the Questionnaire in 1838.[9] What is new is the technology within which the phenomenon of sampling bias (i.e., Tversky and Kahneman’s ‘Representativeness’) is manifest (human society has an uncanny way of rehashing age-old problem sets).[10] What is lacking is a systematic study of how sampling bias may or may not affect various model architectures.

Consider the stemware and coffee mug example. Because algorithms are capable of making connections that humans cannot, it may very well be able to make the leap from coffee mugs to stemware. The challenge is, we have no way of knowing if it can. Nor do we have a way of knowing how the algorithm makes that leap, even if it is successful. Hopefully, DARPA’s XAI program will offer some insight.[11]

In short, to mitigate sampling bias:

- Ensure your training data is representative of the target ontology

- If it isn’t, get more data

- Consider synthetic data generation if getting more data is not an option

- If a class imbalance occurs only in the training set, consider re-sampling the training set

- If a class imbalance occurs throughout the entire dataset, consider training individual models on individual classes, then combining results for heightened performance

All the focus is on the data science, but it should really just be on the data. Many organizations are trying to churn out 11-course meals worthy of a Michelin star without going to the supermarket first. We’ve got to get the data piece right. So at NT Concepts, we are taking a hard look at other, less-sexy aspects of data science, like data provenance analytics, data and database architectures in support of solid science, and the newest hardware capable of powering all the data munging inherent in this AI/ML business.

Collaborative intelligence: take two

There is ample research on the importance and process of creating productive teams.[12] Researchers have assessed everything from gender roles, to experience levels, to training in specific disciplines and hedgehog predilections.[13],[14] All the research points to the fact that there is, indeed, an ideal balance between the elements in a team, especially when forecasting, prediction, or creativity is the team’s primary objective.

We readily accept the principle that one person cannot do everything. We benefit from the contributions of colleagues. So why is it that we expect a lone algorithm to solve a massive, complex problem?

Like humans, algorithms are likely to be able to perform a fairly straightforward task pretty well. They may even be able to perform very complex tasks quite well, like beating Lee Sedol at Go, a feat 99% of Americans could not accomplish.[15] Going back again to our stemware and mug analogy—it’s likely we could train an algorithm to classify images of coffee mugs and stemware with near-perfect accuracy. But two things will eventually break the model:

- Humans will invent a new style of coffee mug that the model has never seen before.

- Someone will try the model on teacups and saucers and proclaim it an inconceivable failure of modern technology when the label “coffee mug” appears on screen.

The first event tells us that algorithms are not permanent. They, like humans, benefit from continuous learning. The second event illustrates that to be truly useful in any modern dining scenario, the algorithm needs a couple of different models—at the very least, one that knows coffee and one that knows tea.

The meaningful combination of multiple algorithms diminishes the chance that any one model will exert undue influence on the overall results. Such an AI ecosystem takes traditional ensemble modeling approaches a step further. A truly successful AI ecosystem may have some automated model management; a way to route data through extant algorithms to both maximize and de-bias the output solutions. It may also calculate ecosystem-level metrics (such as degree of model consensus in output or standard deviation away from this consensus) in addition to model-level metrics (accuracy, rate of convergence, etc.).

This ecosystem for the meaningful combination of algorithms towards an expressed goal is where we believe the future of AI/ML must go. The ecosystem will serve as a check and balance on individual model output, producing a more comprehensive, less biased result. This result will be born from the additional perspectives of varied models. It will also provide an infrastructure for continuous learning, updating, and training. This infrastructure will rely on a constant stream of incoming data sources (e.g., citizen science) and a solid data architecture with robust (and configurable) ETL processes.

It really is all about data

Since Kahneman and Tversky began exploring the fallibility of the human intellect in the 1940s, the challenges and mitigation strategies they uncovered remain with us today. More than that, they are as applicable to our modern technology environment as they were for the basic analyst training in the previous decade.

While not as biased as human decision making, algorithms are not impervious. Humans are part of the ML Lifecycle this blog series has sought to explore. The ML model takes after its grand architect (people) when it comes to judgment and decision errors. And through the exploration of this interplay of people and machines, what we come to know absolutely is that the data matters and that models, like humans, produce a better result when they work together.

References

[1]

- Richey, Melonie K. “The Intelligence Analyst’s Guide to Cognitive Bias.” The Intelligence Analyst’s Guide to Cognitive Bias, LuLu, 2014.

- Richey, Melonie K. “Reduce Bias In Analysis: Why Should We Care? (Or: The Effects Of Evidence Weighting On Cognitive Bias And Forecasting Accuracy)”, sourcesandmethods.blogspot.com/2014/03/reduce-bias-in-analysis-why-should-we.html.

- Richey, Melonie K. “Your New Favorite Analytic Methodology: Structured Role Playing.” Your New Favorite Analytic Methodology: Structured Role Playing, 13 July 2013, sourcesandmethods.blogspot.com/2013/07/your-new-favorite-analytic-methodology.html.

[2] Fox, Justin. “Instinct Can Beat Analytical Thinking.” Harvard Business Review, 7 Sept. 2017, hbr.org/2014/06/instinct-can-beat-analytical-thinking.

[3] “Hebbian Theory.” Wikipedia, Wikimedia Foundation, 5 Mar. 2019, en.wikipedia.org/wiki/Hebbian_theory.

[4] Richey, Melonie K. “Orienting The Intelligence Requirement: The Zooming Technique.” Orienting The Intelligence Requirement: The Zooming Technique, sourcesandmethods.blogspot.com/2013/09/orienting-intelligence-requirement.html.

[5] Hackman, J. Richard. “Collaborative Intelligence: Using Teams to Solve Hard Problems.” Amazon, Amazon, 16 May 2011, www.amazon.com/Collaborative-Intelligence-Using-Teams-Problems/dp/1605099902.

[6] Green, Kesten C. Forecasting Decisions in Conflict Situations: a Comparison of Game Theory, Role-Playing, and Unaided Judgement. Https://Www.journals.elsevier.com/International-Journal-of-Forecasting, www.forecastingprinciples.com/files/pdf/Greenforecastinginconflict.pdf.

[7] IARPA Serious Research Program

[8] Crawford, Kate. “The Hidden Biases in Big Data.” Harvard Business Review, 16 Jan. 2018, hbr.org/2013/04/the-hidden-biases-in-big-data.

[9] Robert H. Gault (1907) A History of the Questionnaire Method of Research in Psychology, The Pedagogical Seminary, 14:3, 366-383, DOI: 10.1080/08919402.1907.10532551.

[10] Tversky, Amos, and Daniel Kahneman. “Judgment under Uncertainty: Heuristics and Biases.” Judgment under Uncertainty, pp. 3–20., doi:10.1017/cbo9780511809477.002., Amos Tversky and Daniel Kahneman, Science New Series, Vol. 185, No. 4157 (Sep. 27, 1974), pp. 1124-1131.

[11] Gunning, David. “Defense Advanced Research Projects Agency.” Defense Advanced Research Projects Agency, DARPA, www.darpa.mil/program/explainable-artificial-intelligence.

[12] Richey, Melonie K. “Seven Secrets To Creating A Cohesive Team.” Seven Secrets To Creating A Cohesive Team, Sources and Methods, 21 Aug. 2013, sourcesandmethods.blogspot.com/2013/08/seven-secrets-to-creating-cohesive-team.html.

[13] Gottberg, Kathy. “Are You A Hedgehog or a Fox-And Why Should You Care?” SMART Living 365, 4 July 2018, www.smartliving365.com/are-you-a-hedgehog-or-a-fox-and-why-should-you-care/.

[14] Tetlock, Philip E. Expert Political Judgment: How Good Is It? How Can We Know? Princeton University Press, 2017.

[15] Metz, Cade. “https://www.wired.com/2016/03/Two-Moves-Alphago-Lee-Sedol-Redefined-Future/.” WIRED, 16 Mar. 2016, www.wired.com/2016/03/two-moves-alphago-lee-sedol-redefined-future/.

[16] Vlasits, Anna. “Drones Are Turning Civilians into an Air Force of Citizen Scientists by Anna Vlasits.” WIRED, 17 Feb. 2017, www.wired.com/2017/02/drones-turning-civilians-air-force-citizen-scientists/.