Artificial intelligence (AI) system development involves complex processes and requires coordination across large and diverse engineering teams. Organizations are increasingly adopting digital engineering tools and methodologies to maximize efficiency and collaboration. These tools help manage the end-to-end AI lifecycle, integrate workflows, centralize knowledge, and align objectives across stakeholders.

Several categories of digital engineering tools are particularly impactful for AI development.

Data Management Tools

The fuel for AI systems is data. Data management tools like data version control systems, data catalogs, data lakes, and data warehouses are pivotal for managing, governing, and sharing the huge datasets required for AI.

- Data versioning with tools like Data Version Control (DVC), Git LFS, neptune.ai, and Pachyderm tracks changes to datasets just as code versioning tracks changes to source files, significantly improving reproducibility and collaboration when multiple people work with the same data.

- Data catalogs such as Collibra provide a searchable inventory of available datasets along with metadata like data definitions, ownership, lineage, and appropriate usage. Catalogs make finding and understanding data assets easier across the organization.

- Data lakes and data warehouses consolidate data from disparate systems into a central, curated, and secured storage layer, making data access much simpler for developers and data scientists across teams.
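To make the catalog idea concrete, here is a minimal Python sketch of two capabilities described above: keyword search over dataset metadata and lineage lookup. The `DatasetEntry` and `DataCatalog` classes, their fields, and the dataset names are hypothetical illustrations; this is not the API of Collibra or any other catalog product.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    name: str
    description: str
    owner: str
    upstream: list[str] = field(default_factory=list)  # lineage: datasets this one is derived from
    tags: set[str] = field(default_factory=set)


class DataCatalog:
    """Hypothetical in-memory catalog: metadata only, no actual data."""

    def __init__(self) -> None:
        self._entries: dict[str, DatasetEntry] = {}

    def add(self, entry: DatasetEntry) -> None:
        self._entries[entry.name] = entry

    def search(self, term: str) -> list[str]:
        """Case-insensitive substring match on name/description, exact match on tags."""
        term = term.lower()
        return sorted(
            e.name
            for e in self._entries.values()
            if term in e.name.lower()
            or term in e.description.lower()
            or term in {t.lower() for t in e.tags}
        )

    def lineage(self, name: str) -> list[str]:
        """Return all upstream ancestors of a dataset, nearest first (breadth-first)."""
        seen: list[str] = []
        queue = list(self._entries[name].upstream)
        while queue:
            current = queue.pop(0)
            if current in seen:
                continue
            seen.append(current)
            entry = self._entries.get(current)
            if entry is not None:
                queue.extend(entry.upstream)
        return seen
```

Recording lineage as a list of upstream names keeps each entry small while still letting anyone trace a training set back to its raw sources.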

Toolbox Spotlight: Data Version Control (DVC)

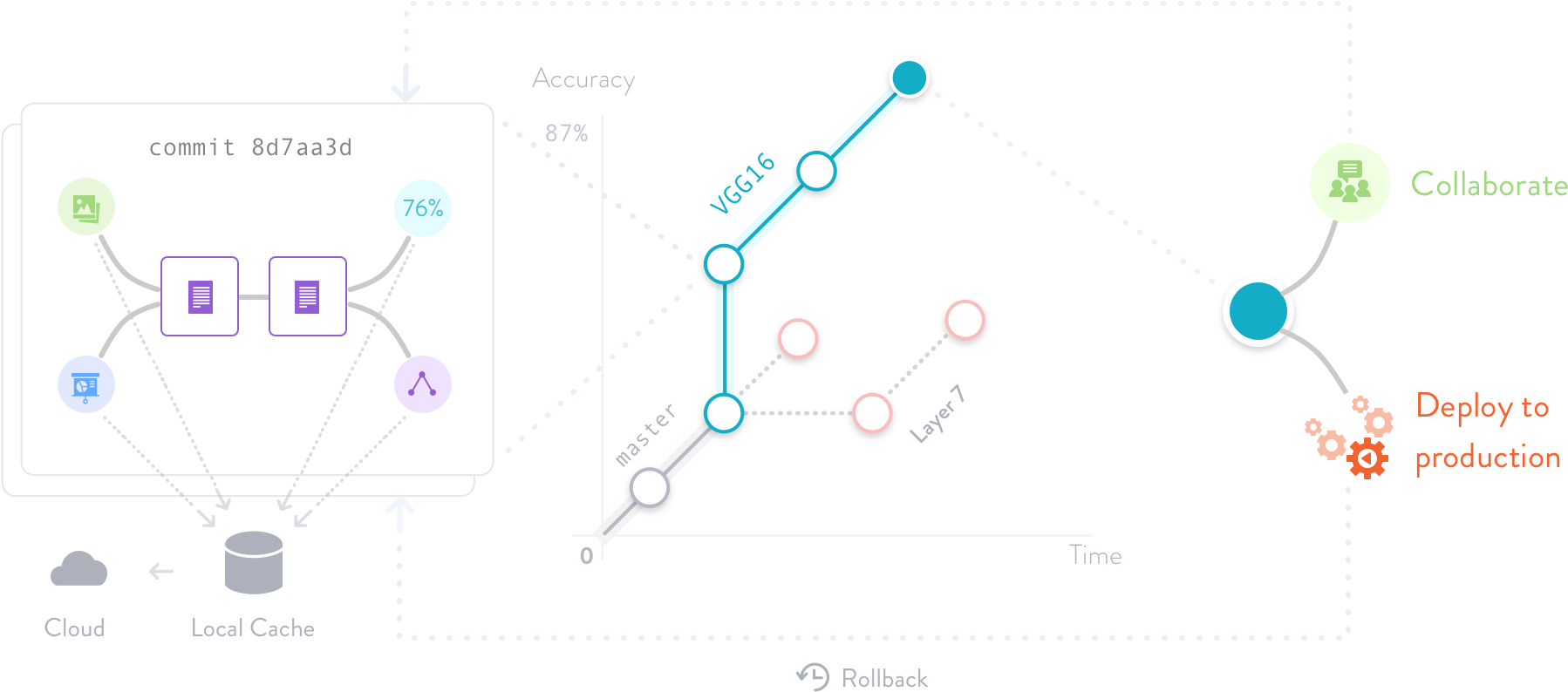

DVC is an open-source tool for data versioning and pipeline management. Information about DVC is primarily drawn from the project’s official documentation and GitHub repository. Analogous to Git for code, DVC is designed specifically for versioning large datasets and machine learning (ML) models.

DVC Benefits:

- DVC tracks data through lightweight metadata files committed to Git, instead of storing the actual data in the Git repository or on a Git LFS server.

- The data itself lives in remote storage, while the metadata in Git records its versions and provenance.

- DVC codifies processing steps as “DVC pipelines” that automate those steps while making them reusable and reproducible.

- DVC streamlines data science and MLOps workflows by making data shareable, reproducible, and versioned alongside code.

DVC Resources:

- DVC.org Documentation – Comprehensive guides and API reference for using DVC

- DVC GitHub Repo – Source code and additional usage examples and tutorials
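The core mechanism behind DVC, storing data in a content-addressed cache and committing only a small metadata file to Git, can be sketched in plain Python. This is a simplified, hypothetical illustration: the `track`/`checkout` functions, the `.meta.json` file name, and the cache layout are assumptions made for the example and do not reproduce DVC's actual `.dvc` file format, cache structure, or commands.

```python
import hashlib
import json
import shutil
import tempfile
from pathlib import Path


def md5_of(path: Path) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def cache_path(cache_dir: Path, md5: str) -> Path:
    # Content-addressed layout: the first two hex characters become a subdirectory.
    return cache_dir / md5[:2] / md5[2:]


def track(data_file: Path, cache_dir: Path) -> dict:
    """Copy a data file into the cache and write a small metadata file next to it.

    Only the metadata file would be committed to Git; the cache (or a remote
    copy of it) holds the actual bytes.
    """
    md5 = md5_of(data_file)
    target = cache_path(cache_dir, md5)
    if not target.exists():  # identical content is stored only once
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(data_file, target)
    meta = {"path": data_file.name, "md5": md5, "size": data_file.stat().st_size}
    meta_file = data_file.with_name(data_file.name + ".meta.json")
    meta_file.write_text(json.dumps(meta, indent=2))
    return meta


def checkout(meta: dict, cache_dir: Path, dest: Path) -> None:
    """Restore the exact data version described by a metadata record."""
    shutil.copy2(cache_path(cache_dir, meta["md5"]), dest)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        data = root / "labels.csv"
        data.write_text("id,label\n1,cat\n")
        v1 = track(data, root / "cache")
        data.write_text("id,label\n1,cat\n2,dog\n")
        v2 = track(data, root / "cache")
        checkout(v1, root / "cache", data)  # roll the working copy back to v1
        print(v1["md5"] != v2["md5"], data.read_text() == "id,label\n1,cat\n")
```

Because the metadata file is tiny, Git can version it cheaply, and an older metadata record plus a checkout step is enough to recover the matching data. DVC layers remote storage, pipelines, and much more on top of this basic idea.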

Version Control and Code Collaboration Platforms

Robust version control systems such as Git, and the code collaboration platforms built on it such as GitHub, GitLab, and Bitbucket, are essential for efficient AI engineering. These tools allow developers to track code changes, collaborate on projects, maintain revision histories, implement permissions and access controls, and integrate with other software development lifecycle tools.

For distributed teams, version control enables seamless code sharing and collaboration regardless of location. Teams can work in parallel on features, fix bugs, and merge changes with complete transparency. Platforms like GitHub and GitLab also include project tracking, code reviews, documentation, and other capabilities that further improve team coordination.

Model Registry and Model Operations Tools

AI models are the core products of the development process. Model registry and model operations (ModelOps) platforms manage models through their lifecycle of development, testing, production, monitoring, and governance.

- Registry tools like MLflow Model Registry catalog model artifacts, weights, configurations, and metadata in a central repository, improving model discovery, understanding, and reuse across projects and teams. Deployment platforms such as Seldon Core then serve registered models in production.

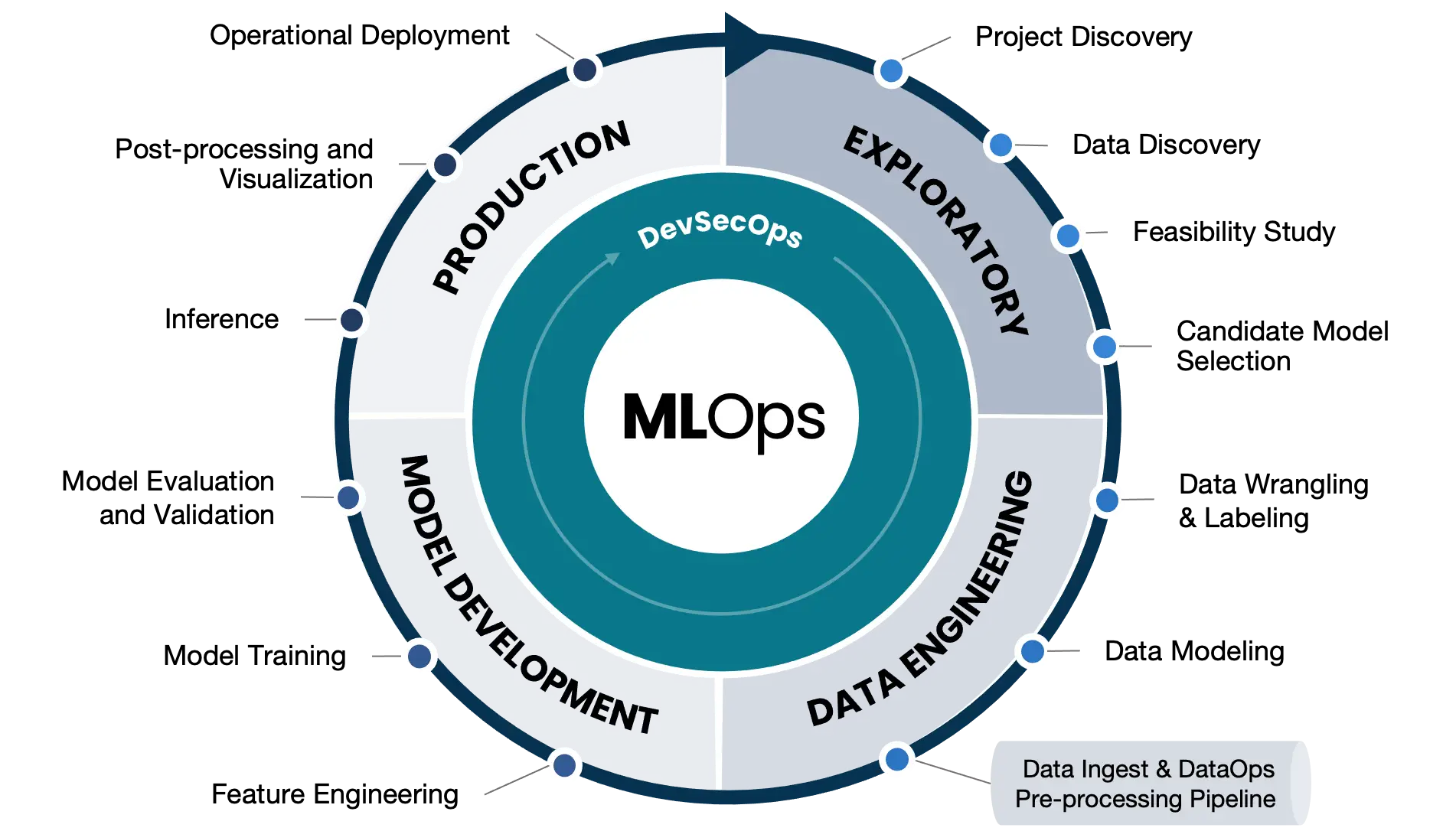

- ModelOps tools like ArgoFlow and TensorFlow Extended (TFX) iterate on and track the model lifecycle by orchestrating the steps of building, validating, deploying, monitoring, and updating models. ModelOps brings structure and DevSecOps-style automation to model development workflows.
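A model registry is, at its core, a versioned catalog of model artifacts plus lifecycle state. The sketch below assumes a simple four-stage lifecycle (`None`, `Staging`, `Production`, `Archived`) and an in-memory store; the class and method names are hypothetical and do not mirror the MLflow Model Registry API, although MLflow has used a similar set of stage names.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical lifecycle stages, echoing the development -> testing -> production flow.
STAGES = ("None", "Staging", "Production", "Archived")


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_uri: str  # where the weights/config live, e.g. object storage
    metrics: dict[str, float] = field(default_factory=dict)
    stage: str = "None"


class ModelRegistry:
    """A toy in-memory registry; real registries persist this in a database."""

    def __init__(self) -> None:
        self._versions: dict[str, list[ModelVersion]] = {}

    def register(self, name: str, artifact_uri: str, metrics: dict[str, float]) -> ModelVersion:
        """Add a new, auto-numbered version of a named model."""
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1, artifact_uri, dict(metrics))
        versions.append(mv)
        return mv

    def transition(self, name: str, version: int, stage: str) -> ModelVersion:
        """Move a version to a new lifecycle stage."""
        if stage not in STAGES:
            raise ValueError(f"unknown stage {stage!r}")
        mv = self._versions[name][version - 1]
        if stage == "Production":
            # Keep a single production version: archive whatever was serving before.
            for other in self._versions[name]:
                if other.stage == "Production" and other is not mv:
                    other.stage = "Archived"
        mv.stage = stage
        return mv

    def latest(self, name: str, stage: str) -> ModelVersion | None:
        """Return the highest-numbered version currently in the given stage."""
        candidates = [v for v in self._versions.get(name, []) if v.stage == stage]
        return max(candidates, key=lambda v: v.version, default=None)
```

For example, registering two versions of a model and promoting each to `Production` in turn leaves version 1 `Archived` and version 2 as the version returned by `latest(name, "Production")`. Allowing only one production version at a time is one possible policy, chosen here for simplicity.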

Integrated Development Environments

Rather than development teams piecing together disjointed digital engineering tools, integrated development environments (IDEs) like Databricks and Equitus Graph Analytics provide an all-in-one platform with built-in capabilities for version control, data management, model registry, collaboration, and more.

Some advantages of integrated platforms are easier data product team onboarding, smoother interoperability between features, and a unified interface. However, IDEs provide less flexibility compared to a customized software toolchain, especially when working with unique mission data problems in classified environments.

Workflow Orchestration and Pipelines

Workflow orchestration streamlines the end-to-end development process by chaining tools and tasks into automated pipelines, improving efficiency, standardization, and reliability of workflows.

Popular AI/ML workflow orchestrators include Prefect, Argo, Apache Airflow, Kubeflow Pipelines, and MLRun. Many of them run steps in containers on Kubernetes. Pipelines are defined either declaratively (Argo Workflows uses YAML manifests) or as Python code (Airflow, Prefect), and the orchestrator then executes the steps in dependency order.
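What an orchestrator does can be reduced to a small core: run each step once, only after the steps it depends on, and pass their outputs forward. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) for the ordering. The step functions, the toy dataset, and the 0.1 error threshold for the deployment gate are hypothetical; real orchestrators such as Airflow or Prefect add scheduling, retries, containers, and distributed execution on top.

```python
from __future__ import annotations

from graphlib import TopologicalSorter  # Python 3.9+
from typing import Any, Callable

Step = tuple[Callable[..., Any], list[str]]  # (function, names of upstream steps)


def run_pipeline(steps: dict[str, Step]) -> dict[str, Any]:
    """Run each step once, after all of its dependencies, passing their outputs in."""
    graph = {name: set(deps) for name, (_, deps) in steps.items()}
    results: dict[str, Any] = {}
    for name in TopologicalSorter(graph).static_order():
        func, deps = steps[name]
        results[name] = func(*(results[d] for d in deps))
    return results


def prepare() -> list[tuple[float, float]]:
    raw = [(1, 2.0), (2, 4.1), (None, 5.0), (3, 5.9)]
    return [(float(x), y) for x, y in raw if x is not None]  # drop incomplete rows


def train(rows: list[tuple[float, float]]) -> float:
    # Least-squares slope for y = w * x (no intercept).
    return sum(x * y for x, y in rows) / sum(x * x for x, _ in rows)


def evaluate(rows: list[tuple[float, float]], w: float) -> float:
    return sum((y - w * x) ** 2 for x, y in rows) / len(rows)  # mean squared error


def deploy(mse: float, w: float, threshold: float = 0.1) -> dict[str, Any]:
    # Deployment gate: only ship the model if it meets the quality bar.
    return {"status": "deployed" if mse < threshold else "rejected", "weight": w}


PIPELINE: dict[str, Step] = {
    "prepare": (prepare, []),
    "train": (train, ["prepare"]),
    "evaluate": (evaluate, ["prepare", "train"]),
    "deploy": (deploy, ["evaluate", "train"]),
}

results = run_pipeline(PIPELINE)
print(results["deploy"])
```

Declaring dependencies by name, rather than calling steps directly, is what lets an orchestrator reorder, parallelize, or rerun individual steps without changing the step code.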

Pipelines encapsulate processes like data preparation, feature engineering, model training, evaluation, and deployment as reusable components. This structure accelerates development and improves consistency across projects. Below are the NT Concepts MLOps lifecycle steps that outline our approach to ML Operations (MLOps).

Interactive Development Environments

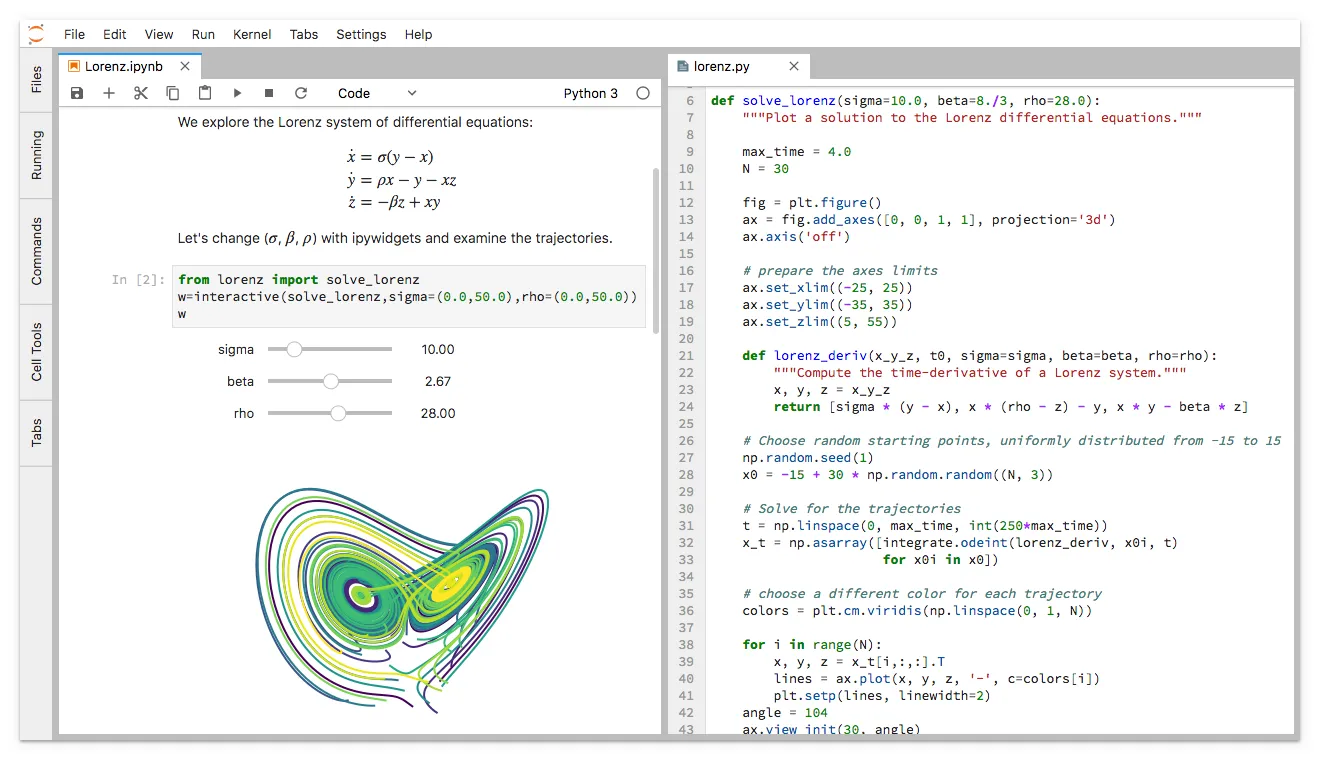

Several tools provide hosted Jupyter notebook-style environments for interactive development with live code, visualizations, and documentation. Example platforms are Google Colab, AWS SageMaker Studio Lab, and Microsoft Azure Data Studio.

These notebooks help teams demonstrate concepts, discuss ideas, and share reusable code snippets. The mix of text, code, and media improves understanding and collaboration compared to traditional documents or isolated code.

Project Tracking and Ticketing Systems

Platforms like Jira, Trello, monday.com, and GitHub Issues provide ways for teams to coordinate through project boards, tickets, dependencies, notifications, and conversations.

These systems provide an overview of team priorities, responsibilities, and progress. Shared ticketing systems also funnel collaboration and knowledge exchange through a structured system rather than fragmented emails and messages.

Communication Tools

Instant messaging, video chat, screen sharing, online whiteboards, and team sites all facilitate the rapid communication essential for dynamic teamwork. Slack, Mattermost, Microsoft Teams, Zoom, and Confluence are popular platforms with customizable channels and integrations tailored to AI development teams.

Documentation & Visualization Tools

Clear documentation is critical for onboarding, sharing institutional knowledge, and compliance. Tools like Confluence, Read the Docs, and GitHub Wikis simplify creating, organizing, maintaining, and accessing team documentation.

To share AI visualizations and prototype rapidly, many teams developing AI solutions use Jupyter Notebooks. Here are some key ways Jupyter Notebooks and JupyterHub enable efficient AI engineering:

- Interactive Coding: Jupyter provides interactive notebooks for Python, R, and other languages that combine code, visualizations, and documentation in one place. This interactivity supports rapid prototyping and iteration for AI modeling.

- Visualizations: Notebooks allow engineers to visualize and better understand data, models, and results right alongside code using plots, graphs, and other media.

- Documentation: Notebooks integrate code, equations, visuals, and text commentary to document experiments, explain concepts, and track progress.

- Collaboration: Notebooks can be easily shared between team members or the community to distribute knowledge and collaborate.

- Rapid Experimentation: The interactive notebook format makes it fast and simple to try new models or data workflows through rapid prototyping.

- Reproducibility: Notebooks create a literate programming record that keeps code, results, and context together, which supports reproducibility when the notebook is rerun from top to bottom.

- Modularity: Notebooks allow segmentation of code into logical sections and cells that can be rearranged or repurposed easily.

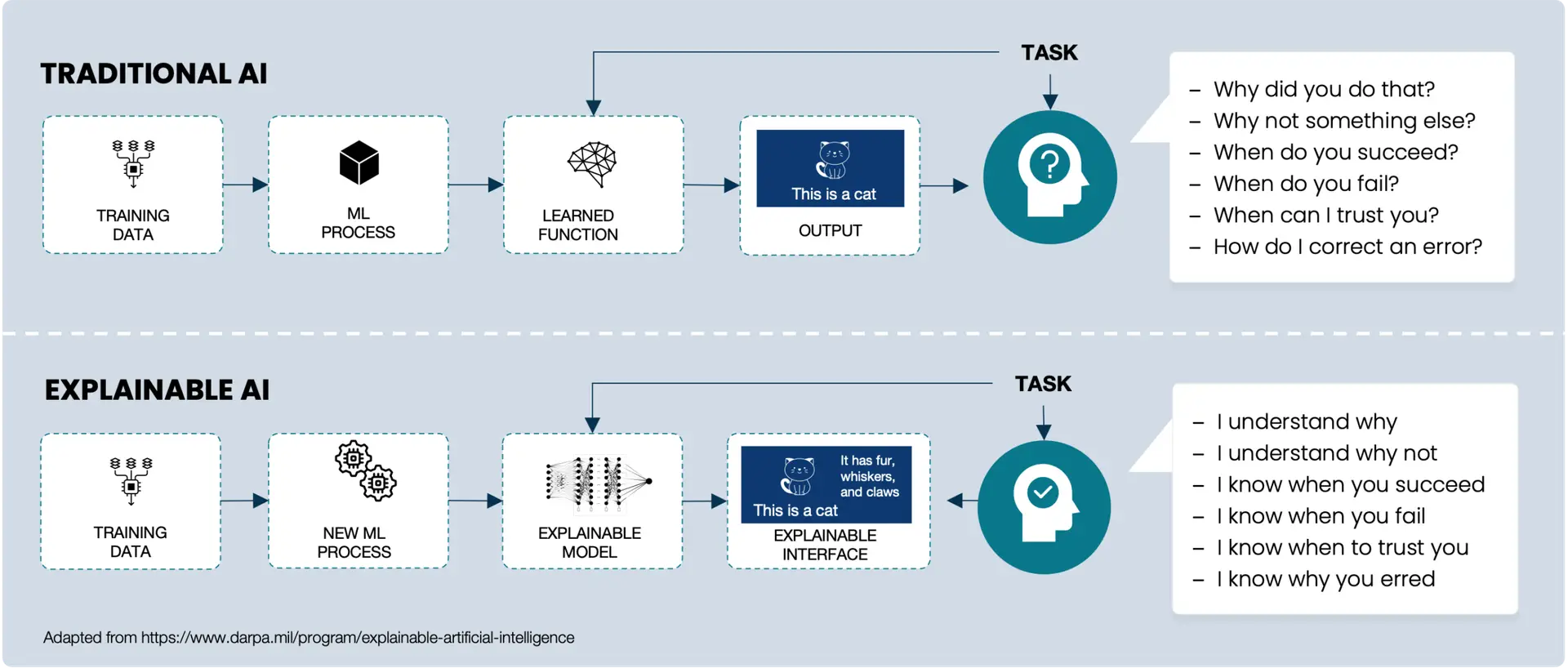

Beyond visualization, many stakeholders in governance and accountability roles want “Explainable AI”: a newer term for methods that help people understand how an AI model arrived at a specific conclusion.

While human understanding is ultimately the goal of thorough documentation and visualization, many organizations use digital engineering tools such as MATLAB to help interpret and break down a model's reasoning.
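One widely used, model-agnostic explanation technique is permutation feature importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below is a plain-Python illustration of that technique, not necessarily the approach MATLAB uses; the toy `model` and data are hypothetical, and in practice the importance would be measured on held-out data.

```python
import random
from typing import Callable, Sequence

Row = list[float]
Model = Callable[[Row], int]


def accuracy(model: Model, X: Sequence[Row], y: Sequence[int]) -> float:
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(
    model: Model, X: Sequence[Row], y: Sequence[int], n_repeats: int = 10, seed: int = 0
) -> list[float]:
    """Average drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = []
            for row, value in zip(X, column):
                perturbed = list(row)
                perturbed[j] = value
                X_perm.append(perturbed)
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical "black box" classifier: it only looks at feature 0.
def model(row: Row) -> int:
    return int(row[0] > 0.5)


X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [model(row) for row in X]
print(permutation_importance(model, X, y))
```

Because this hypothetical model ignores feature 1, shuffling that column never changes a prediction and its importance is exactly zero, while shuffling feature 0 lowers accuracy. A large importance signals that the model relies on that feature, which is the kind of evidence accountability stakeholders look for.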

To learn more, check out this 2019 article in which Johanna Pingel discusses Explainable AI at a MathWorks Research Summit.

Additional Benefits of Digital Engineering Tools for AI

In addition to the categories above, shared digital tools provide other benefits through built-in security, access controls, auditing, automation, and integration capabilities. Teams rely less on informal documentation, scripts, tribal knowledge, and manual oversight.

The use of centralized platforms, structured workflows, and automation frees teams to focus on the higher-value creative and strategic aspects of AI development. Integrated tools also improve visibility and alignment between teams on a shared mission.

Thoughtful incorporation of digital engineering tools unlocks enormous efficiency and collaboration benefits for AI development across large, decentralized organizations. The gains are realized in accelerated innovation, reduced costs, improved quality, and ultimately, more impactful and valuable AI solutions.

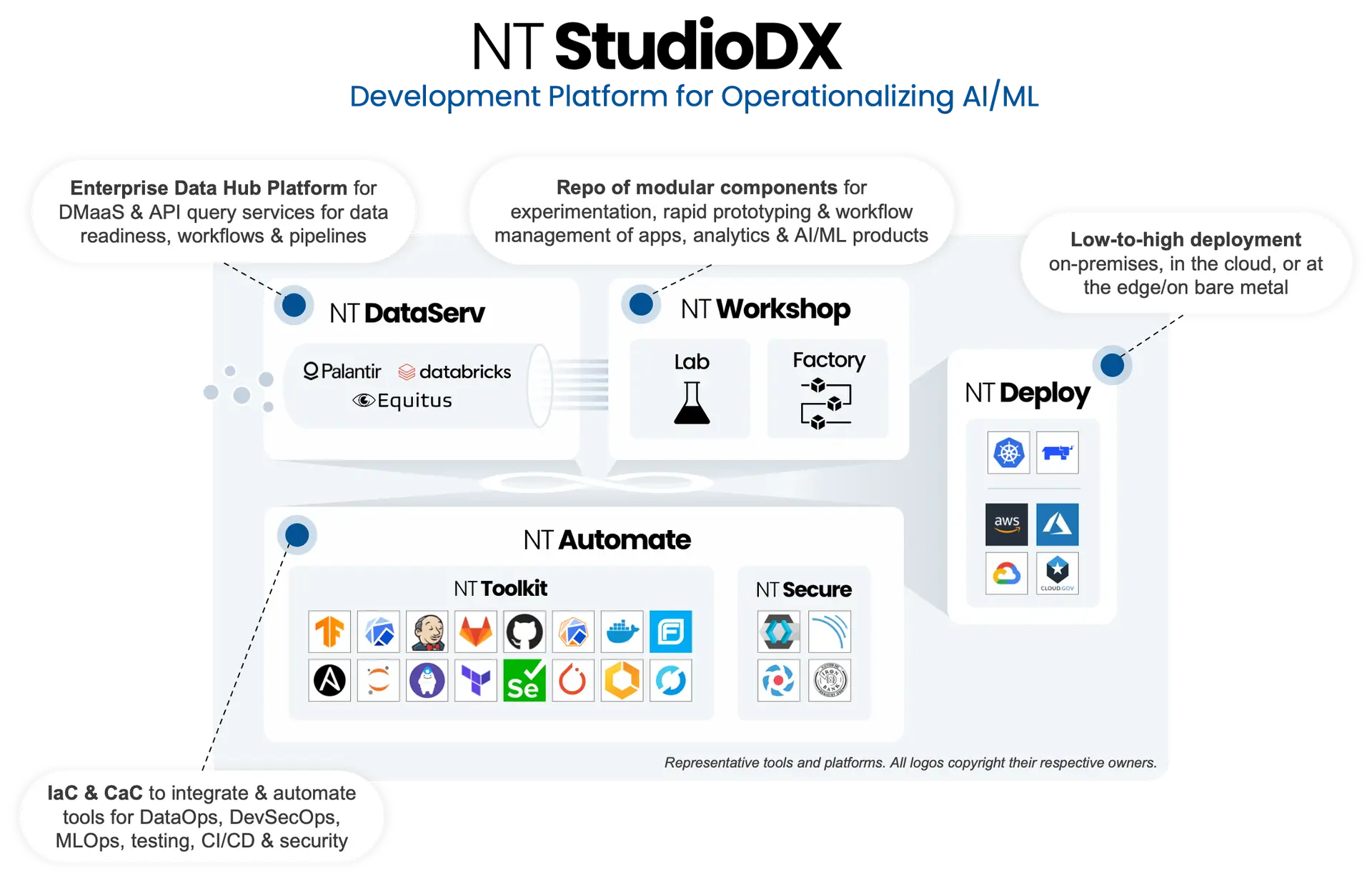

If your organization needs an isolated development environment for high compliance requirements, NT StudioDX is a license-free, modular AI development platform filled with plug-and-play digital engineering tools. NT StudioDX uses industry best practices through automation and stateful management of configurations, and provides the development guardrails to safely and securely build microservice applications and AI software agents.

Nicholas Chadwick

Cloud Migration & Adoption Technical Lead Nick Chadwick is obsessed with creating data-driven government enterprises. With an impressive certification stack (CompTIA A+, Network+, Security+, Cloud+, Cisco, Nutanix, Microsoft, GCP, AWS, and CISSP), Nick is our resident expert on cloud computing, data management, and cybersecurity.