NEXTOps℠

Proven solution delivery

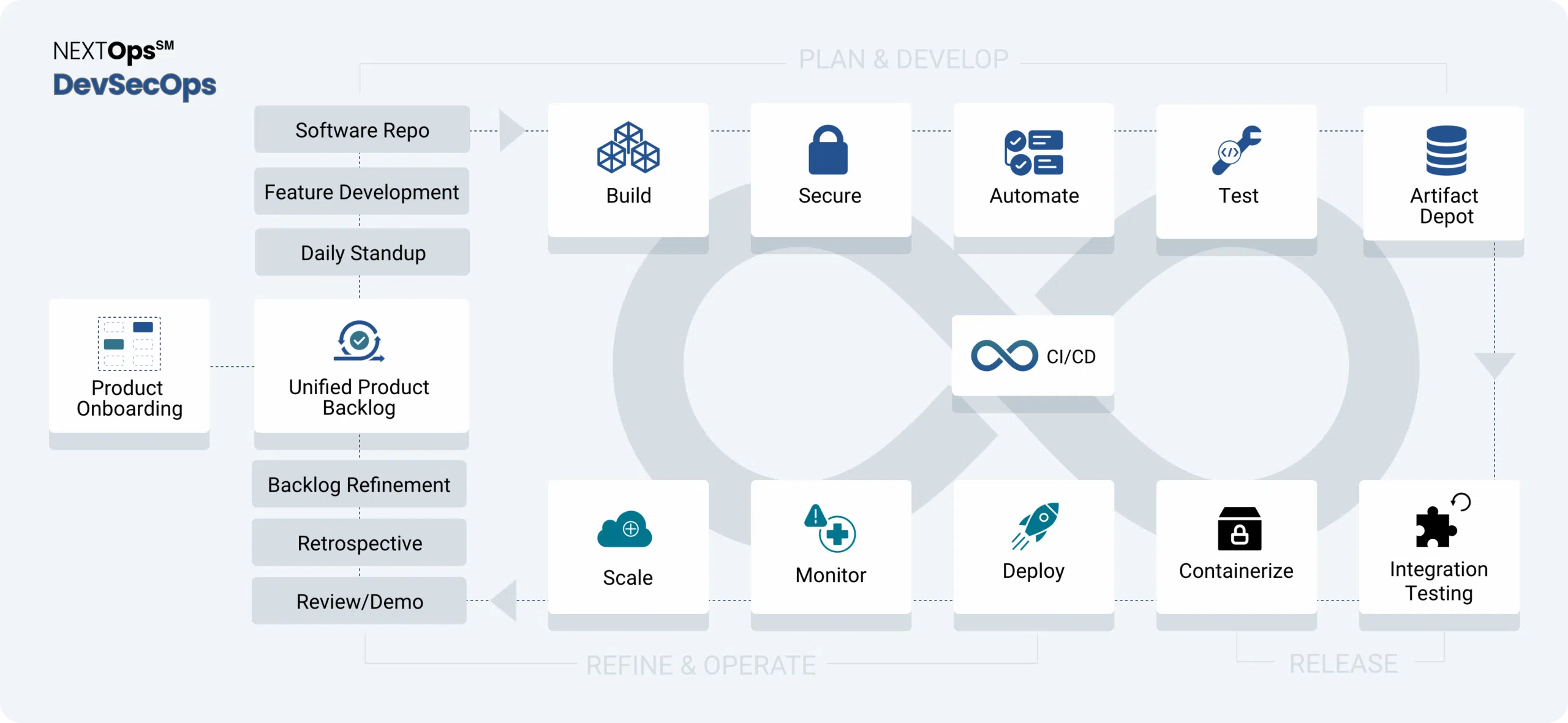

Our NEXTOps℠ framework is our proven agile solution delivery approach. It drives collaboration, automation, data management, security, software development, data science, deployment, and operations to support mission readiness and decision advantage.

NEXTOps℠

Orchestrates

Supports the beginning-to-end orchestration of data, process, security, tools, code, environments, and teams

Automates

Enables

Delivers

Delivers plug-and-play, cross-functional development and deployment support

CI/CD


Combined with automated testing and iterative, incremental code delivery, our CI/CD pipelines enable cybersecurity agility and integrity: security audits and vulnerability assessments can be performed quickly and effectively while staying compliant, which helps maintain a continuous Authority-to-Operate (cATO).

Product Onboarding

Custom-built per Product Team requirements, our CI/CD pipeline starts with product onboarding, including a DevSecOps and Agile overview to “show the way.”

Our product onboarding process is critical to the success of our playbook because it establishes expectations, practices, and a shared understanding and language among team members. We understand that Agile does not happen without disciplined practices, and we embrace the motto “waterfall, only you can prevent.”

Unified Product Backlog


Build

We integrate language-specific build tools into our CI/CD pipelines using Jenkins commit triggers or GitLab runners. Our DevSecOps Engineers configure and tune build tools for Python packages, Java JAR packages, and .NET Core apps, to name a few.

Secure

Automate

Infrastructure-as-Code (IaC) tools such as Terraform, Ansible, Terragrunt, and Bash scripts create version-controlled, idempotent, stateful virtual infrastructure. Our DevSecOps and Platform Engineers write the IaC and pipeline automation. Automation is one of our major strengths, enabling repeatable and rapid DevSecOps.
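Idempotent here means that applying the same code a second time leaves the infrastructure unchanged. The following minimal Python sketch illustrates the plan-then-apply reconciliation idea behind declarative IaC tools, assuming a toy in-memory resource model; the `plan` and `apply` functions and the resource names are hypothetical and are not the Terraform, Terragrunt, or Ansible API.

```python
from typing import Dict, List, Tuple

# Hypothetical resource model: resource name -> attribute dict.
State = Dict[str, Dict[str, str]]
Action = Tuple[str, str]  # (verb, resource name)


def plan(desired: State, current: State) -> List[Action]:
    """Compute the changes needed to move `current` to `desired`."""
    actions: List[Action] = []
    for name, attrs in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != attrs:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


def apply(desired: State, current: State) -> Tuple[State, List[Action]]:
    """Apply the plan and return the new state plus the actions taken."""
    actions = plan(desired, current)
    new_state = {name: dict(attrs) for name, attrs in current.items()}
    for verb, name in actions:
        if verb == "delete":
            del new_state[name]
        else:  # create and update both converge to the desired attributes
            new_state[name] = dict(desired[name])
    return new_state, actions


if __name__ == "__main__":
    desired = {
        "vm-web": {"size": "m5.large", "image": "rhel9"},
        "bucket-logs": {"encryption": "aes256"},
    }
    current = {
        "vm-web": {"size": "t3.small", "image": "rhel9"},
        "vm-old": {"size": "t3.micro"},
    }
    state, first = apply(desired, current)
    print(first)   # create bucket-logs, update vm-web, delete vm-old
    state, second = apply(desired, state)
    print(second)  # []: re-running the same code changes nothing
```

Because the plan is derived from the difference between desired and current state rather than from a fixed list of steps, the second run finds nothing to change.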

Test

Our DevSecOps Engineers and Developer Support team integrate automated testing toolkits into CI/CD pipelines. We use testing tools that are well documented and supported through Jenkins plugins or through webhooks into SonarQube. For unit testing we use pytest for Python, JUnit for Java, and Jest for JavaScript; for Section 508 and accessibility testing we use Tanaguru.
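As an illustration of the unit-testing style, here is a minimal pytest-compatible test module. The `bump_version` helper is a hypothetical example function, not part of any tool named above. pytest collects functions whose names start with `test_` and reports plain `assert` failures, so the module needs no pytest import.

```python
# test_versions.py: run with `pytest test_versions.py`


def bump_version(version: str, part: str) -> str:
    """Hypothetical helper: bump the major, minor, or patch field of 'X.Y.Z'."""
    major, minor, patch = (int(x) for x in version.split("."))
    if part == "major":
        return f"{major + 1}.0.0"
    if part == "minor":
        return f"{major}.{minor + 1}.0"
    if part == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown version part: {part!r}")


# pytest collects every function named test_* in this module.
def test_patch_bump_keeps_major_and_minor():
    assert bump_version("1.4.2", "patch") == "1.4.3"


def test_minor_bump_resets_patch():
    assert bump_version("1.4.2", "minor") == "1.5.0"


def test_major_bump_resets_minor_and_patch():
    assert bump_version("1.4.2", "major") == "2.0.0"


def test_unknown_part_is_rejected():
    # pytest.raises(ValueError) is the usual idiom; a plain try/except
    # keeps this module importable even where pytest is not installed.
    try:
        bump_version("1.4.2", "build")
    except ValueError:
        return
    raise AssertionError("expected ValueError")
```

In a pipeline, a Jenkins stage or GitLab job would typically run the same `pytest` command and fail the build on any failing test.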

Artifact Depot

Our DevSecOps and Platform Engineers securely source and store artifacts, including containers, libraries, binaries, and packages. We use Iron Bank for container images, private Nexus repositories, or JFrog Artifactory as required. We support supply-chain security and maintain a Software Bill of Materials (SBOM). We configure FIPS-compliant transit and storage solutions for our DoD and Intel clients.
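One supply-chain control that complements an SBOM is checking that every artifact pulled from the depot matches a recorded digest. The sketch below assumes a hypothetical manifest that maps relative artifact paths to SHA-256 hex digests; it uses only the Python standard library and is not a Nexus, Artifactory, or Iron Bank API.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 16) -> str:
    """Stream the file in chunks so large binaries are not read into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(expected: Dict[str, str], root: Path) -> List[str]:
    """Return a list of problems; an empty list means every artifact matched.

    `expected` maps a relative artifact path to its recorded SHA-256 hex
    digest (a hypothetical manifest format, e.g. exported from an SBOM).
    """
    problems: List[str] = []
    for rel_path, want in sorted(expected.items()):
        path = root / rel_path
        if not path.is_file():
            problems.append(f"missing: {rel_path}")
        elif sha256_of(path) != want.lower():
            problems.append(f"digest mismatch: {rel_path}")
    return problems


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "app.jar").write_bytes(b"example bytes")
        manifest = {
            "app.jar": hashlib.sha256(b"example bytes").hexdigest(),
            "lib.whl": "00" * 32,  # recorded but never downloaded
        }
        print(verify_artifacts(manifest, root))  # ['missing: lib.whl']
```

A pipeline step could refuse to promote a build whenever the returned list is non-empty.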

Integration Testing

We use automated integration testing tools where possible. Our DevSecOps Engineers configure Postman for API testing and mocking, Selenium for web apps, Cucumber for acceptance testing, and JMeter for performance testing. We continuously strive toward Behavior-Driven Development (BDD) practices to support early integration testing.

Containerize

Our Platform Engineers use Docker to build and maintain container images for seamless and secure deployments. We harden Kubernetes platform services, load balancing, networking, and storage and apply the relevant STIGs. We primarily use Rancher (RKE2) for government-compliant workloads and have DoD experience with OpenShift, VMware Tanzu, and D2IQ.

Deploy

Our Platform Engineers and SREs configure Helm charts and Kubernetes Operators to deploy containers to Pods, update security policies, and expose services. We use Argo CD, running in the Kubernetes cluster, to pull approved releases from the authorized Git repository into the production cluster. When requested, we use Ansible or Chef for on-premises, hybrid cloud, or compliance needs, and Knative for serverless workloads.

Monitor

Our Platform Engineers and SREs use monitoring services such as EFK/ELK, AWS CloudWatch, Splunk, and Istio. We develop alerts, triggers, thresholds, and autoscaling tied to log events and performance metrics. We configure log and event forwarding for Cybersecurity Service Provider (CSSP), GitOps tool, and Mattermost integrations.
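A threshold alert tied to log events can be as simple as an error rate over a sliding window. This minimal sketch assumes each log event has already been classified as an error or not; the `ErrorRateAlert` class and its window and threshold values are hypothetical. In practice the equivalent rule would usually live in the monitoring stack, for example as a Splunk alert or a CloudWatch alarm, rather than in application code.

```python
from collections import deque
from typing import Deque, Optional


class ErrorRateAlert:
    """Hypothetical rule: fire when the error rate over the last `window`
    events reaches `threshold`, and resolve when it drops back below."""

    def __init__(self, window: int = 100, threshold: float = 0.05) -> None:
        self.window = window
        self.threshold = threshold
        self.events: Deque[bool] = deque(maxlen=window)  # oldest events drop off
        self.firing = False

    def observe(self, is_error: bool) -> Optional[str]:
        """Record one event; return a message only when the alert state changes."""
        self.events.append(is_error)
        if len(self.events) < self.window:
            return None  # not enough data to judge yet
        rate = sum(self.events) / len(self.events)
        if rate >= self.threshold and not self.firing:
            self.firing = True
            return f"ALERT: error rate {rate:.1%} >= {self.threshold:.1%}"
        if rate < self.threshold and self.firing:
            self.firing = False
            return f"RESOLVED: error rate {rate:.1%}"
        return None


if __name__ == "__main__":
    alert = ErrorRateAlert(window=10, threshold=0.2)
    stream = [False] * 8 + [True] * 2 + [False] * 9
    for event in stream:
        message = alert.observe(event)
        if message:
            print(message)
```

Tracking the `firing` flag means the rule emits one alert when the threshold is crossed and one resolution when the rate recovers, instead of notifying on every event.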

Scale

Our Cloud Architects, SREs, and Platform Engineers use Cloud Service Providers and hypervisors to configure service, instance, pod, and storage autoscaling. We use AWS GovCloud, C2E, Azure, GCP, and on-premises APIs to automate scaling operations via monitoring services. We have cleared, cloud-certified personnel for DoD 8570.01-M compliance.
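For pod autoscaling, the Kubernetes Horizontal Pod Autoscaler documents a proportional rule: desired replicas = ceil(current replicas × current metric ÷ target metric), with no change when that ratio is within a tolerance of 1.0 (0.1 by default). The following Python function is a simplified sketch of that rule for a single metric, assuming hypothetical min/max replica bounds; it omits the stabilization windows and Pod readiness handling the real controller applies.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified single-metric HPA-style scaling decision."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: skip scaling
    proposed = math.ceil(current_replicas * ratio)
    # Clamp the proposal to the configured replica bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))   # 6: CPU at 150% of target
    print(desired_replicas(4, 62, 60))   # 4: within the 10% tolerance
    print(desired_replicas(8, 300, 60))  # 10: proposal of 40 clamped to the max
```

For example, 4 replicas averaging 90% CPU against a 60% target give a ratio of 1.5 and a proposal of 6 replicas.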

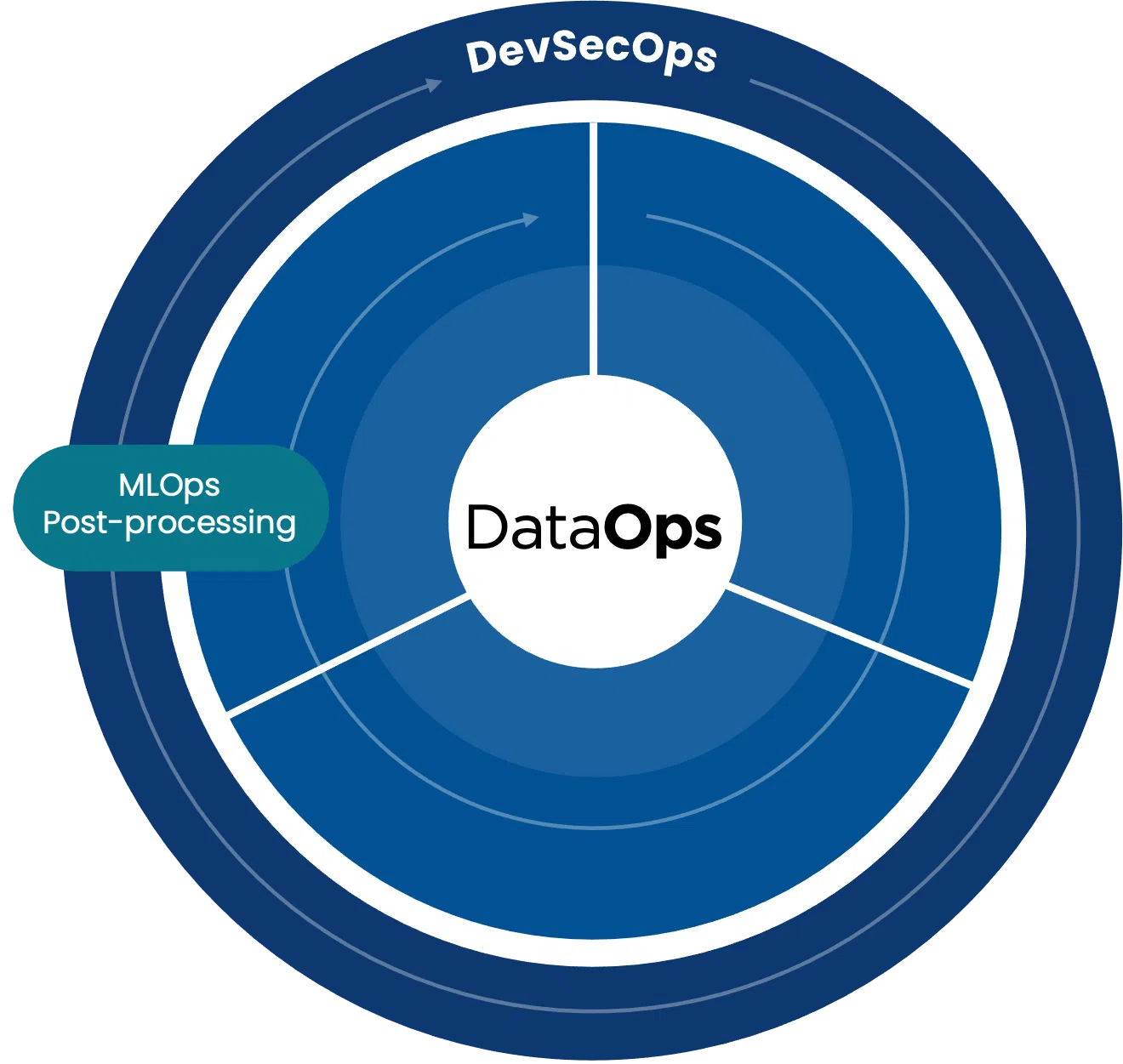

EXPLORATORY

- Data Identification: What data contain relevant info?

- Schema Discovery: Is there an existing schema?

- Frequency: When is the data needed?

- Access: How is the data accessed?

PROCESSING

- Schema: Target schema identification

- Ingest: ETL, ELT, wrangling

- Quality: Is the data model comprehensive, complete, and accurate?

PRODUCTION

- Storage: Data Lake

- Governance: Data tracking and logging

- Delivery: MLOps Post-processing

- Access: API endpoints for query, analytics, and visualization

Get data ready & automate with DataOps.

Data is the foundational element of advanced analytics, such as machine learning. Our DataOps pipeline iteratively enhances data quality, literacy, and governance.

DataOps creates an automated, continuous flow of trusted data for DevSecOps and MLOps ingestion and use. Government stakeholders, data stewards, and users work closely with DevSecOps engineers to build data pre-processing and storage architectures that readily support retrieval, visualization, analytics, and broader use across mission enterprise applications.
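The Processing stage's quality question can be partly automated. This minimal sketch checks records against a target schema for missing fields, wrong types, and unexpected fields, and reports a pass rate; the schema, field names, and report format are hypothetical. Schema checks address completeness and consistency but cannot by themselves confirm accuracy, which still needs domain rules or reference data.

```python
from typing import Any, Dict, List

# Hypothetical target schema: field name -> required Python type.
TARGET_SCHEMA: Dict[str, type] = {"sensor_id": str, "timestamp": str, "reading": float}


def check_record(record: Dict[str, Any], schema: Dict[str, type]) -> List[str]:
    """Return the schema violations found in one record (empty list if clean)."""
    issues: List[str] = []
    for field, field_type in schema.items():
        value = record.get(field)
        if value is None:
            issues.append(f"missing {field}")
        elif not isinstance(value, field_type):
            issues.append(f"{field} should be {field_type.__name__}")
    extra = sorted(set(record) - set(schema))
    if extra:
        issues.append("unexpected fields: " + ", ".join(extra))
    return issues


def quality_report(records: List[Dict[str, Any]], schema: Dict[str, type]) -> Dict[str, Any]:
    """Summarize how many records pass and which ones fail, keyed by position."""
    failures: Dict[int, List[str]] = {}
    for index, record in enumerate(records):
        issues = check_record(record, schema)
        if issues:
            failures[index] = issues
    passed = len(records) - len(failures)
    return {
        "records": len(records),
        "passed": passed,
        "pass_rate": passed / len(records) if records else 1.0,
        "failures": failures,
    }


if __name__ == "__main__":
    batch = [
        {"sensor_id": "a1", "timestamp": "2024-01-01T00:00:00Z", "reading": 3.2},
        {"sensor_id": "a2", "reading": "high"},
    ]
    print(quality_report(batch, TARGET_SCHEMA))
```

Failing records could be routed to a quarantine area for data stewards to review, so each pass through the pipeline improves the trusted data set.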

Operationalize your AI products with MLOps.

MLOps leverages DevSecOps and DataOps processes at every step: data consumption, pre-processing, model training, and serving pipelines. With MLOps, model development runs iteratively, and its output flows continuously through the DevSecOps process for consistent deployment and maintenance.

Our MLOps teams follow best practices to deliver and maintain scalable software-based AI/ML solutions, including a containerize-first approach; vertical and horizontal scaling using GPUs; model source control; automation; and standardized development and consistent deployment environments.

EXPLORATORY

What problem are we trying to solve, what data are available to solve it, and what are the candidate approaches?

- Project Discovery

- Data Discovery

- Feasibility Study

- Candidate Model Selection

- DataOps Preprocessing Pipeline

MODEL DEVELOPMENT

Environment preparation, hyper-parameter tuning, testing, and validation iterations.

- Feature Engineering

- Model Training & Tuning

- Model Evaluation & Validation

- DataOps Prediction Pipeline

PRODUCTION

Insert the model into production, visualize results, and create APIs and endpoints for access.

- Inference

- DataOps Post-processing

- Visualization & Deployment
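The Model Development stage above iterates on hyper-parameter tuning and validation. The sketch below shows the basic loop on a deliberately tiny model: a one-feature ridge regression without intercept, fitted in closed form, with the regularization strength chosen by error on a held-out validation split. The model, the candidate values, and the 25% split are illustrative assumptions, not a description of a specific NEXTOps℠ pipeline.

```python
import random
from typing import List, Sequence, Tuple

Pair = Tuple[float, float]  # (feature x, target y)


def fit_ridge_1d(data: Sequence[Pair], lam: float) -> float:
    """Closed-form ridge estimate for y ≈ w*x (no intercept): w = Σxy / (Σx² + λ)."""
    sxy = sum(x * y for x, y in data)
    sxx = sum(x * x for x, _ in data)
    return sxy / (sxx + lam)


def mse(data: Sequence[Pair], w: float) -> float:
    """Mean squared error of the model y ≈ w*x on `data`."""
    return sum((y - w * x) ** 2 for x, y in data) / len(data)


def tune(
    data: List[Pair], lambdas: Sequence[float], val_fraction: float = 0.25, seed: int = 0
) -> Tuple[float, float, float]:
    """Return (best lambda, fitted weight, validation MSE) over the candidate lambdas."""
    if len(data) < 2:
        raise ValueError("need at least two rows to form train and validation splits")
    rows = list(data)
    random.Random(seed).shuffle(rows)  # fixed seed keeps the split reproducible
    n_val = max(1, int(len(rows) * val_fraction))
    val, train = rows[:n_val], rows[n_val:]
    scored = []
    for lam in lambdas:
        w = fit_ridge_1d(train, lam)  # train only on the training split
        scored.append((mse(val, w), lam, w))  # score only on held-out rows
    best_err, best_lam, best_w = min(scored)
    return best_lam, best_w, best_err


if __name__ == "__main__":
    rng = random.Random(42)
    samples = [(float(x), 2.0 * x + rng.gauss(0, 0.5)) for x in range(1, 41)]
    print(tune(samples, [0.0, 1.0, 10.0, 100.0]))
```

Keeping the validation rows out of fitting is what makes the selected value an honest estimate; a production pipeline would typically add cross-validation and log each trial for reproducibility.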

NEXTOps℠ is part of our NT Playbook, which guarantees Expertise as a Service.

NT Experts

- Scalable cross-functional teams of cleared SMEs, Scrum Masters, data engineers & scientists, ML engineers, DevSecOps engineers, software developers & analysts

- Data scientists trained to integrate with DevSecOps teams to write effective code (or partner with a programmer to learn)

- Certified, cleared, right-sized teams to optimize delivery velocity & capacity

NEXTOps℠

- Agile practices orchestrate teams for frictionless DevSecOps, DataOps & MLOps

- Unique Scrum + Kanban approach that works for BOTH developers & data scientists to sync delivery velocity in larger teams

- Continuous improvement & automation-driven approach

- Methods & tools to help satisfy ethical AI requirements

NT3X

- Rapid prototyping approach that shortens the idea-to-execution gap

- Leverages our NEXTOps℠ framework to enable MVM/P/CR delivery in less than 6 months*

- Design thinking principles integrated to elicit stakeholder feedback early & iteratively

Introducing

NT StudioDX

A portable, scalable platform of 100% open-source tools and services to rapidly prototype and deploy software, analytic, and ML products—anywhere, with any type of data.

100% open source. Infrastructure agnostic. Built for classified networks. Vendor agnostic. Containerized & portable. Edge deployable.